Previously I mentioned that a Kubernetes instance manages a set of nodes connected by a network. For now, assume that the nodes can all talk to each other and to the outside world.

One of these nodes, or a set of them, is used to run the Kubernetes control plane applications.

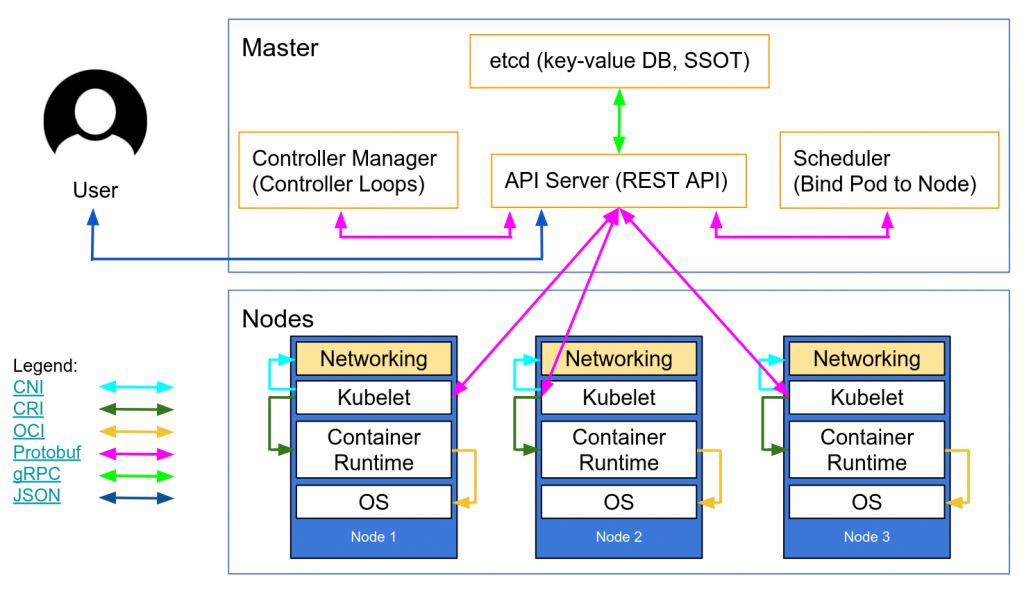

The control plane is the brains of the system: it figures out how best to run pods on the other, non-master nodes. It instructs these nodes to create and delete pods and other resources. The other nodes regularly check in with the master node to report their state and to fetch other information. If a node goes incommunicado for longer than a configurable grace period, the control plane reschedules the pods that were running on that node onto other nodes.
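To make the check-in and rescheduling idea concrete, here is a toy Python model. It is only a sketch, not how Kubernetes is implemented: the `Node` class, the `reschedule_lost_nodes` function, the `grace_period` value and the "fewest pods wins" placement rule are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    last_heartbeat: float  # time of the most recent check-in, in seconds
    pods: list = field(default_factory=list)


def reschedule_lost_nodes(nodes, now, grace_period):
    """Move pods off nodes whose last check-in is older than grace_period.

    Returns a list of (pod, from_node, to_node) tuples.
    """
    healthy = [n for n in nodes if now - n.last_heartbeat <= grace_period]
    lost = [n for n in nodes if now - n.last_heartbeat > grace_period]
    if not healthy:
        return []  # nowhere to move the pods to
    moved = []
    for node in lost:
        while node.pods:
            pod = node.pods.pop(0)
            # Toy placement rule (an assumption): the healthy node with the fewest pods.
            target = min(healthy, key=lambda n: len(n.pods))
            target.pods.append(pod)
            moved.append((pod, node.name, target.name))
    return moved


nodes = [
    Node("node-a", last_heartbeat=100.0, pods=["web-1", "web-2"]),
    Node("node-b", last_heartbeat=100.0, pods=["db-1"]),
    Node("node-c", last_heartbeat=40.0, pods=["cache-1", "web-3"]),  # silent for 65s
]
moved = reschedule_lost_nodes(nodes, now=105.0, grace_period=40.0)
for pod, src, dst in moved:
    print(f"{pod}: {src} -> {dst}")
```

Here node-c has been silent for longer than the grace period, so its two pods are spread over node-a and node-b. The real scheduler weighs much more than pod counts (resource requests, affinity rules and so on).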

There is an application called the kubelet running on each node, which communicates with the master node through the API server.

Master nodes can run a kubelet and a container runtime and run pods as well, but it's not advisable. If such an “other” pod misbehaves, your whole cluster could go down. Most managed Kubernetes services don't give you access to the master node at all.

The current state of the system (all the created resources, etc.) is stored in an etcd instance. The master can be made highly available as well: you can have multiple masters sharing the same etcd instance. The API server is also designed to be horizontally scalable.

Kubectl Client

The kubectl client talks to the control plane by calling the REST API of the API server on the master. To create a pod, for example, it sends a resource definition (written as YAML or JSON) to the API server, which saves the resource in etcd. The control plane then decides which node the pod will run on, and the kubelet on the chosen node is told to start it.
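As a rough illustration of what such a request looks like, the Python sketch below builds a minimal Pod definition and the REST call that would create it. The pod name `hello` and the image `nginx:1.17` are placeholders I picked, and `pod_manifest` and `create_pod_request` are hypothetical helpers. Nothing is sent over the network; the code only prints the method, path and body.

```python
import json


def pod_manifest(name, image, namespace="default"):
    """Return a minimal Pod resource definition as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"containers": [{"name": name, "image": image}]},
    }


def create_pod_request(manifest):
    """Describe the HTTP request that would create the pod on the API server."""
    namespace = manifest["metadata"]["namespace"]
    path = f"/api/v1/namespaces/{namespace}/pods"  # core v1 Pod collection
    body = json.dumps(manifest, indent=2)
    return "POST", path, body


method, path, body = create_pod_request(pod_manifest("hello", "nginx:1.17"))
print(method, path)
print(body)
```

A real client also has to authenticate and talk TLS to the API server, which kubectl handles for you using your kubeconfig.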

In a managed cluster there will be some security around who can access the API server. GCP does this with an access code and secret. Another option GCP provides is a private cluster, where all non-master nodes have only private IPs, together with a whitelist on the master so that only the IPs you specify can reach it.

Versions

I am not sure why, but a master node can run a different version than the non-master nodes. Usually the master node's Kubernetes version leads that of the non-master nodes. To see the versions, run kubectl get nodes -o yaml.

kubeProxyVersion: v1.17.3

kubeletVersion: v1.17.3

The above is for minikube, but in a real Kubernetes cluster like on GCP you should see something like

kubeProxyVersion: v1.15.90-gke.8

kubeletVersion: v1.15.9-gke.8

In GCP you don't have access to the master node, but you can get its version directly from the UI: look at the details of your cluster.
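If you want to pull these fields out in a script rather than read them by eye, something like the Python sketch below should work on the JSON form of the same output (kubectl get nodes -o json). The `SAMPLE` document is a hand-trimmed stand-in that keeps only the fields used here, and `node_versions` and `minor_version` are hypothetical helper names.

```python
import json

# Hand-trimmed stand-in for `kubectl get nodes -o json`; real output has many more fields.
SAMPLE = """
{
  "items": [
    {
      "metadata": {"name": "minikube"},
      "status": {
        "nodeInfo": {"kubeletVersion": "v1.17.3", "kubeProxyVersion": "v1.17.3"}
      }
    }
  ]
}
"""


def node_versions(kubectl_json):
    """Map each node name to its kubelet and kube-proxy versions."""
    doc = json.loads(kubectl_json)
    result = {}
    for item in doc.get("items", []):
        info = item["status"]["nodeInfo"]
        result[item["metadata"]["name"]] = {
            "kubelet": info["kubeletVersion"],
            "kubeProxy": info["kubeProxyVersion"],
        }
    return result


def minor_version(version):
    """Turn a version string such as 'v1.15.9-gke.8' into (major, minor)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


versions = node_versions(SAMPLE)
print(versions)
print(minor_version("v1.15.9-gke.8"))
```

Comparing the `(major, minor)` pairs of the master and the nodes is a simple way to see how far the nodes lag behind.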

Managed cluster providers usually keep patching their versions, and it's good to upgrade along with them. The default behaviour in GCP is that they will force-upgrade you. Unless you design your system well, your services may go down when that happens.

Master upgrades and node upgrades are things you need to get used to. We will look at what happens during each of them in a later post.

It may be tempting to think of nodes as the strong pillars of the system and of pods as less reliable things that should be designed to be killed. This is actually wrong: Kubernetes was designed to handle nodes going down as well. To check whether you have designed your services properly, it may be a good idea to randomly kill pods and nodes.
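A very small version of such a random-kill test could look like the Python sketch below. It only picks a victim and prints the kubectl command it would run, so it is safe to try. The pod names, the `protected` set and the helper functions are all invented for the example.

```python
import random


def pick_victim(pods, protected=(), rng=random):
    """Pick a random pod to kill, skipping anything listed in `protected`."""
    candidates = [p for p in pods if p not in protected]
    if not candidates:
        return None
    return rng.choice(candidates)


def delete_command(pod, namespace="default"):
    """Build the kubectl invocation that would delete the given pod."""
    return ["kubectl", "delete", "pod", pod, "--namespace", namespace]


pods = ["web-1", "web-2", "db-1"]  # hypothetical pod names
victim = pick_victim(pods, protected={"db-1"}, rng=random.Random(0))
if victim is not None:
    # Dry run: print the command instead of executing it.
    print(" ".join(delete_command(victim)))
```

To actually run the command you could pass the list to `subprocess.run`. Start in a test cluster, and watch whether your services stay up while the pod is being replaced.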